Hadoop

Getting started

https://www.alexjf.net/blog/distributed-systems/hadoop-yarn-installation-definitive-guide/

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

http://192.168.1.5/chfs/shared/share/jdk8/jdk-8u231-linux-x64.tar.gz

cat << 'EOF' >> /etc/profile

export JAVA_HOME=/opt/jdk1.8.0_231

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

EOF

source /etc/profile; jps; java -version
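The heredoc above appends to /etc/profile every time it is run, so a second run duplicates the exports. A minimal sketch of an append-once guard follows. It writes to a scratch file (`PROFILE`, a name chosen here for illustration) instead of /etc/profile, and the helper `add_java_env` is hypothetical, not part of any tool.

```shell
# Sketch: append the JDK exports only once.
# PROFILE is a scratch file here; point it at /etc/profile on a real host.
PROFILE=$(mktemp)

add_java_env() {
    # Skip if a JAVA_HOME export is already present, so reruns do not duplicate lines.
    if grep -q '^export JAVA_HOME=' "$1"; then
        return 0
    fi
    # The quoted 'EOF' keeps $JAVA_HOME literal instead of expanding it now.
    cat << 'EOF' >> "$1"
export JAVA_HOME=/opt/jdk1.8.0_231
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH
EOF
}

add_java_env "$PROFILE"
add_java_env "$PROFILE"          # second call is a no-op
grep -c '^export' "$PROFILE"     # prints 4
```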

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

http://192.168.1.5/chfs/shared/share/hadoop-3.2.1.tar.gz

cp -a etc/hadoop/hadoop-env.sh etc/hadoop/hadoop-env.sh.bak

egrep -v "^$|^#" etc/hadoop/hadoop-env.sh.bak > etc/hadoop/hadoop-env.sh
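What the filter does, shown on a small made-up sample: empty lines and lines that start with `#` are dropped, everything else is kept. `grep -E` is the current spelling of `egrep`. The sample content is invented for illustration. Note that the pattern only matches `#` in column one, so indented comments would survive.

```shell
# Sketch: the same filter applied to a four-line sample file.
sample=$(mktemp)
printf '%s\n' \
    '# Licensed to the ASF' \
    '' \
    'export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}' \
    '# export JAVA_HOME=' > "$sample"

# Only the uncommented export line is printed.
grep -Ev '^$|^#' "$sample"
```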

[root@node1 hadoop-3.2.1]# cat etc/hadoop/hadoop-env.sh

export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}

export JAVA_HOME=/opt/jdk1.8.0_231

[root@node1 hadoop-3.2.1]# bin/hadoop version

Hadoop 3.2.1

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842

Compiled by rohithsharmaks on 2019-09-10T15:56Z

Compiled with protoc 2.5.0

From source with checksum 776eaf9eee9c0ffc370bcbc1888737

This command was run using /as4k/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar

http://192.168.1.5/chfs/shared/share/hadoop-2.7.7.tar.gz

export HADOOP_HOME=/as4k/hadoop-2.7.7

export PATH=$HADOOP_HOME/bin:$PATH

[root@node1 hadoop-2.7.7]# cat etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://node1:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/as4k/hadoop27-data</value>

</property>

</configuration>

mkdir -p /as4k/hadoop27-data
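To double-check a value without starting Hadoop, a property can be read straight from the file. The helper `get_prop` below is a hypothetical sketch, not a Hadoop tool. It assumes one tag per line, as in the file above, and it does not skip commented-out properties. On a working install, `hdfs getconf -confKey fs.defaultFS` reports the effective value.

```shell
# get_prop FILE NAME: print the <value> that follows <name>NAME</name>.
# Assumes one tag per line; this is not a general XML parser.
get_prop() {
    awk -v want="$2" '
        /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
        /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v)
                    if (n == want) print v }
    ' "$1"
}

# Scratch copy of the properties shown above.
conf=$(mktemp)
cat > "$conf" << 'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://node1:9000</value>
</property>
<property>
<name>hadoop.tmp.dir</name>
<value>/as4k/hadoop27-data</value>
</property>
</configuration>
EOF

get_prop "$conf" fs.defaultFS      # hdfs://node1:9000
get_prop "$conf" hadoop.tmp.dir    # /as4k/hadoop27-data
```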

[root@node1 hadoop-2.7.7]# cat etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node2:50090</value>

</property>

<property>

<name>dfs.namenode.secondary.https-address</name>

<value>node2:50091</value>

</property>

</configuration>

[root@node1 hadoop]# cat masters

node1

[root@node1 hadoop]# cat slaves

node1

node2

[root@node1 hadoop]#

hdfs namenode -format

[root@node1 sbin]# ./start-dfs.sh

Java API operations

https://blog.csdn.net/worldchinalee/article/details/80974544?utm_source=blogxgwz4

org.apache.hadoop.security.AccessControlException: org.apache.hadoop.security.AccessControlException: Permission denied: user=Administrator, access=WRITE, inode="hadoop": hadoop:supergroup:rwxr-xr-x
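Why the write is refused: the client runs as Administrator, the inode is owned by hadoop:supergroup with mode rwxr-xr-x, so Administrator falls into the "other" class, which has no w bit. A simplified sketch of that check follows. `can_write` is a hypothetical helper, it ignores the HDFS superuser bypass and ACLs, and group membership is passed in by hand. Typical remedies are to run the client as the owning user (with simple authentication, the `HADOOP_USER_NAME` environment variable is commonly used for this) or to widen the permissions with `hdfs dfs -chmod` or `-chown`.

```shell
# can_write USER OWNER IN_GROUP MODE
#   MODE     9-character permission string, e.g. rwxr-xr-x
#   IN_GROUP yes/no: whether USER belongs to the inode's group
can_write() {
    user=$1 owner=$2 in_group=$3 mode=$4
    if [ "$user" = "$owner" ]; then
        bits=$(printf '%s' "$mode" | cut -c1-3)   # owner class
    elif [ "$in_group" = yes ]; then
        bits=$(printf '%s' "$mode" | cut -c4-6)   # group class
    else
        bits=$(printf '%s' "$mode" | cut -c7-9)   # other class
    fi
    case $bits in
        ?w?) echo allowed ;;
        *)   echo denied ;;
    esac
}

can_write Administrator hadoop no rwxr-xr-x   # denied
can_write hadoop        hadoop no rwxr-xr-x   # allowed
```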

Basic HDFS commands

[root@node1 ~]# hdfs dfs -help put

[root@node1 ~]# echo hello > hello.txt

[root@node1 ~]# hdfs dfs -put hello.txt /

[root@node1 ~]# hdfs dfs -ls /

Found 2 items

-rw-r--r-- 1 root supergroup 6 2019-12-09 06:51 /hello.txt

drwxr-xr-x - root supergroup 0 2019-12-09 06:47 /test

[root@node1 ~]# hdfs dfs -cat /hello.txt

hello

[root@node1 ~]# hdfs dfs -cat hello.txt

cat: `hello.txt': No such file or directory

[root@node1 ~]# hdfs dfs -cat /hello.txt

hello
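The failed `-cat hello.txt` is a path-resolution issue: a relative HDFS path is resolved against the user's home directory, /user/<username>, while the file was uploaded to /. A sketch of that rule follows. `resolve_hdfs_path` is a hypothetical helper written for illustration and assumes the default home directory layout.

```shell
# resolve_hdfs_path USER PATH: show where HDFS would look for PATH.
resolve_hdfs_path() {
    case $2 in
        /*) printf '%s\n' "$2" ;;                  # absolute: used as-is
        *)  printf '/user/%s/%s\n' "$1" "$2" ;;    # relative: under the home dir
    esac
}

resolve_hdfs_path root hello.txt     # /user/root/hello.txt (does not exist)
resolve_hdfs_path root /hello.txt    # /hello.txt
```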

[root@node1 hadoop-3.2.1]# hadoop fs -help mkdir

-mkdir [-p] <path> ... :

Create a directory in specified location.

-p Do not fail if the directory already exists

[root@node1 hadoop-3.2.1]#

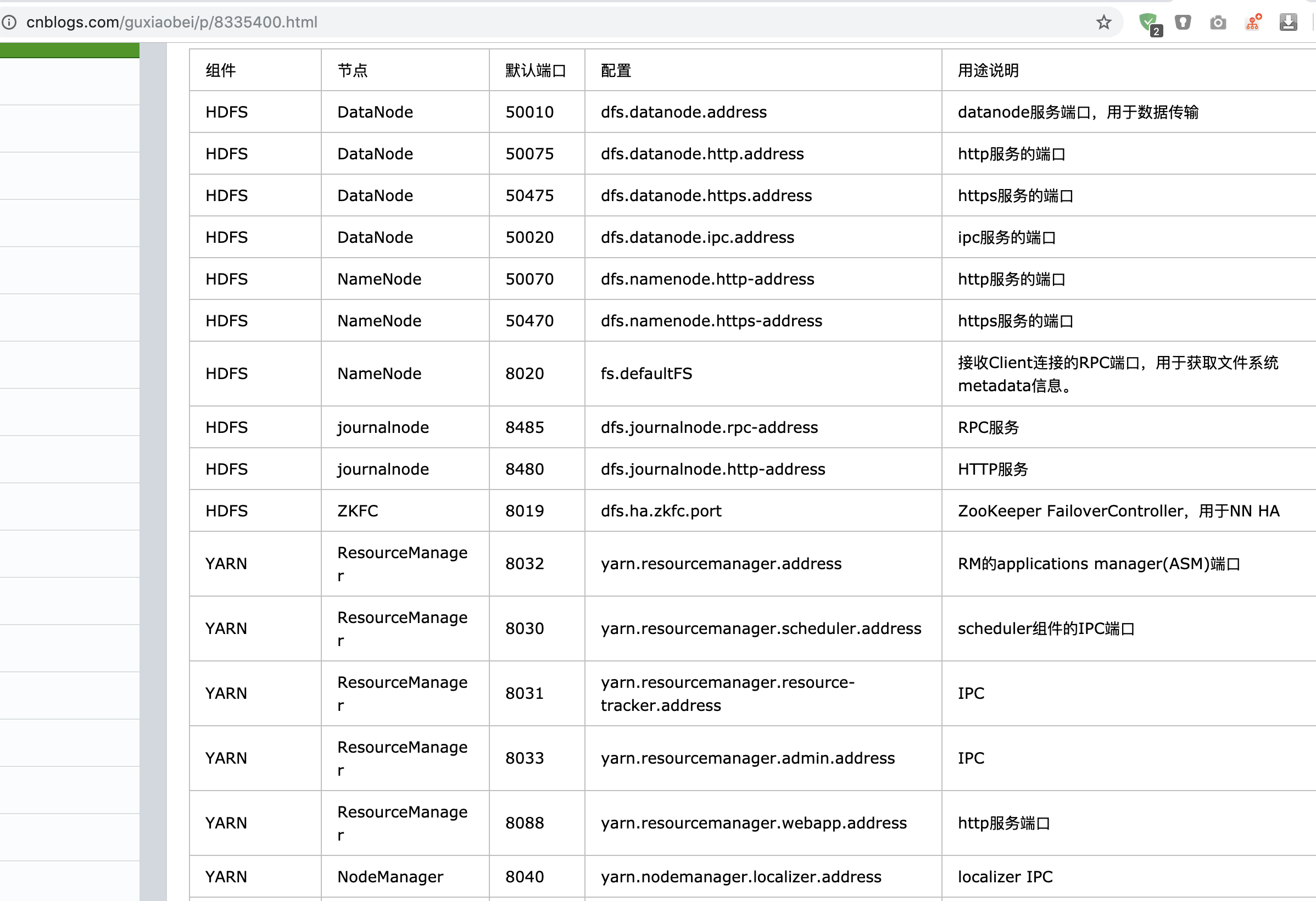

Common Hadoop ports and module overview

https://www.cnblogs.com/guxiaobei/p/8335400.html

HDFS fully distributed installation (3.2.1)

cp -a etc/hadoop/hadoop-env.sh etc/hadoop/hadoop-env.sh.bak

egrep -v "^$|^#" etc/hadoop/hadoop-env.sh.bak > etc/hadoop/hadoop-env.sh

[root@node1 hadoop-3.2.1]# cat etc/hadoop/hadoop-env.sh

export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}

export JAVA_HOME=/opt/jdk1.8.0_231

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

rm -rf /xdata/hadoop32-data; mkdir -p /xdata/hadoop32-data

cat etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.1.118:9820</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/xdata/hadoop32-data</value>

</property>

</configuration>

cat etc/hadoop/hdfs-site.xml

<configuration>

<!-- <property>

<name>dfs.replication</name>

<value>1</value>

</property> -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node2:9868</value>

</property>

<!-- <property>

<name>dfs.namenode.secondary.https-address</name>

<value>node2:50091</value>

</property> -->

</configuration>
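The ports differ from the 2.7.7 config because Hadoop 3 moved the HDFS default ports out of the ephemeral range. The commented-out https-address above still carries 50091, the 2.x value. A lookup sketch for the HDFS web and DataNode ports follows. `hadoop3_port` is a hypothetical helper and the list is limited to the ports I am sure of.

```shell
# hadoop3_port OLD: print the Hadoop 3 default for a Hadoop 2 HDFS port.
hadoop3_port() {
    case $1 in
        50070) echo 9870 ;;  # NameNode HTTP
        50470) echo 9871 ;;  # NameNode HTTPS
        50090) echo 9868 ;;  # SecondaryNameNode HTTP
        50091) echo 9869 ;;  # SecondaryNameNode HTTPS
        50010) echo 9866 ;;  # DataNode data transfer
        50020) echo 9867 ;;  # DataNode IPC
        50075) echo 9864 ;;  # DataNode HTTP
        50475) echo 9865 ;;  # DataNode HTTPS
        *)     echo "$1" ;;  # not in this table: returned unchanged
    esac
}

hadoop3_port 50090   # 9868
hadoop3_port 50091   # 9869
hadoop3_port 50070   # 9870
```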

cat etc/hadoop/workers

node1

node2

node3

scp -rp etc/ root@node2:/as4k/hadoop-3.2.1

scp -rp etc/ root@node3:/as4k/hadoop-3.2.1
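With more nodes, the copy can be driven from the workers file. The sketch below only prints the scp commands (a dry run). `sync_cmds` is a hypothetical helper, and the scratch workers file stands in for etc/hadoop/workers. Pipe the output to `sh` to actually run it, assuming passwordless SSH as root is already set up.

```shell
# Scratch copy of the workers file shown above.
workers=$(mktemp)
printf '%s\n' node1 node2 node3 > "$workers"

# sync_cmds WORKERS_FILE LOCAL_HOST INSTALL_DIR
# Print one scp command per worker, skipping the local host and blank lines.
sync_cmds() {
    while IFS= read -r host; do
        [ -n "$host" ] || continue
        [ "$host" = "$2" ] && continue
        echo "scp -rp etc/ root@$host:$3"
    done < "$1"
}

sync_cmds "$workers" node1 /as4k/hadoop-3.2.1
# scp -rp etc/ root@node2:/as4k/hadoop-3.2.1
# scp -rp etc/ root@node3:/as4k/hadoop-3.2.1
```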

hdfs namenode -format (run once, on the NameNode host only; it is not needed on the DataNodes)

cd /as4k/hadoop-3.2.1; bash sbin/start-dfs.sh

http://node1:9870/dfshealth.html#tab-overview

MapReduce

hadoop fs -mkdir -p /user/joe/wordcount/input/

hadoop fs -mkdir -p /user/joe/wordcount/output (do not run this: the output directory must not exist beforehand, or the job fails)

[root@node1 hadoop-3.2.1]# echo "Hello World Bye World" > file01

[root@node1 hadoop-3.2.1]# echo "Hello Hadoop Goodbye Hadoop" > file02

hadoop fs -put file01 file02 /user/joe/wordcount/input/

hadoop fs -cat /user/joe/wordcount/input/file01

hadoop fs -cat /user/joe/wordcount/input/file02

bin/hadoop jar wc.jar WordCount /user/joe/wordcount/input /user/joe/wordcount/output2

bin/hadoop fs -ls /user/joe/wordcount/output2

[root@node1 hadoop-3.2.1]# bin/hadoop fs -ls /user/joe/wordcount/output2

Found 2 items

-rw-r--r-- 3 root supergroup 0 2019-12-15 22:36 /user/joe/wordcount/output2/_SUCCESS

-rw-r--r-- 3 root supergroup 41 2019-12-15 22:36 /user/joe/wordcount/output2/part-r-00000

[root@node1 hadoop-3.2.1]# bin/hadoop fs -cat /user/joe/wordcount/output2/part-r-00000

2019-12-15 22:37:14,839 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Bye 1

Goodbye 1

Hadoop 2

Hello 2

World 2
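The same result can be reproduced locally with a pipeline that mirrors the map, shuffle and reduce phases. This is only an analogy in plain shell, not how the job runs on the cluster. `wordcount` is a hypothetical helper, and the tab separator is an assumption that agrees with the 41-byte size of part-r-00000 listed above.

```shell
# Recreate the two input files in a scratch directory.
dir=$(mktemp -d)
echo "Hello World Bye World" > "$dir/file01"
echo "Hello Hadoop Goodbye Hadoop" > "$dir/file02"

wordcount() {
    cat "$@" |
        tr -s ' ' '\n' |      # map: emit one word per line
        LC_ALL=C sort |       # shuffle: bring equal keys together
        uniq -c |             # reduce: count each run of equal keys
        awk '{ printf "%s\t%s\n", $2, $1 }'   # key<TAB>count
}

wordcount "$dir/file01" "$dir/file02"
# Bye     1
# Goodbye 1
# Hadoop  2
# Hello   2
# World   2
```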

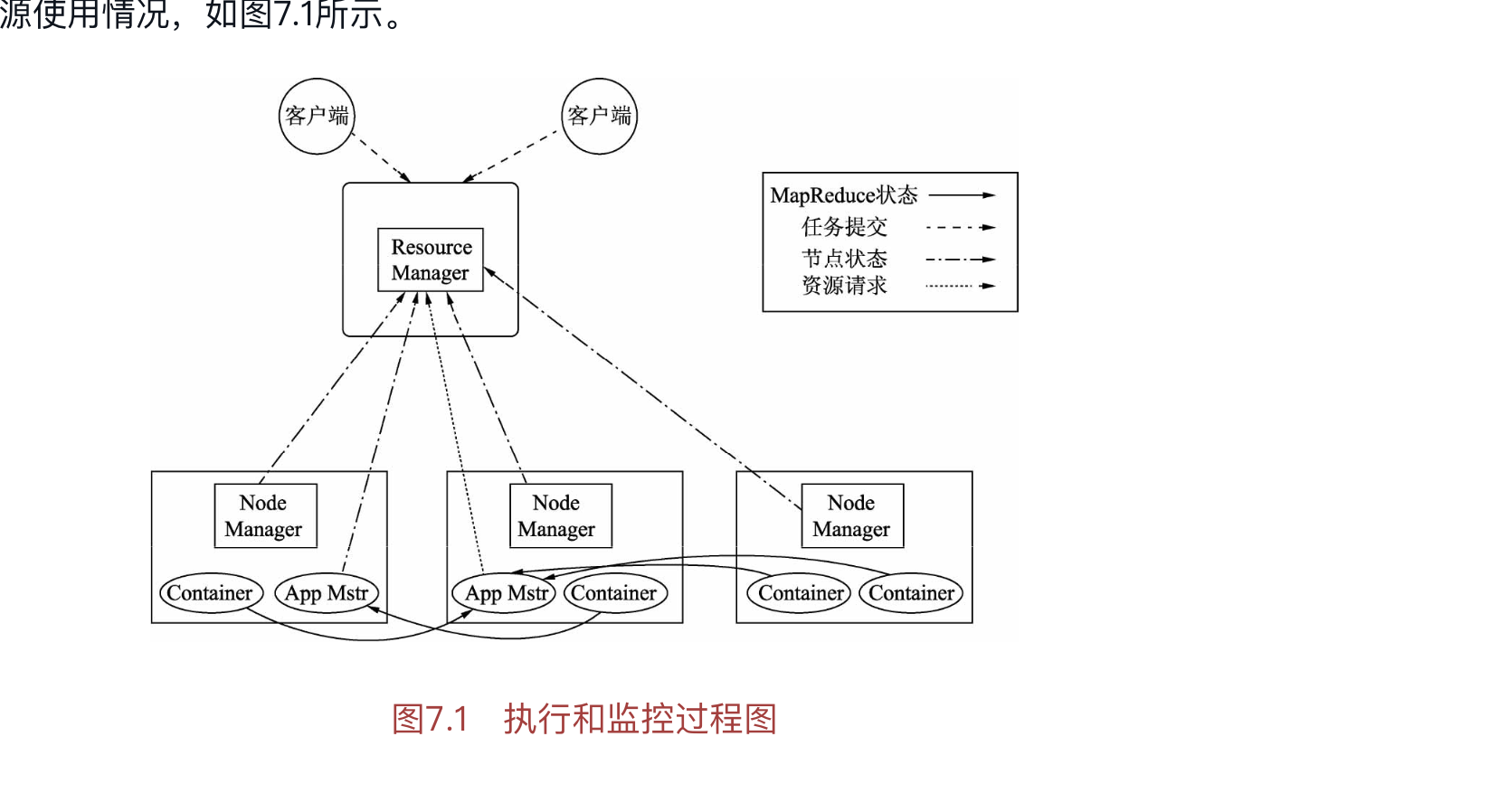

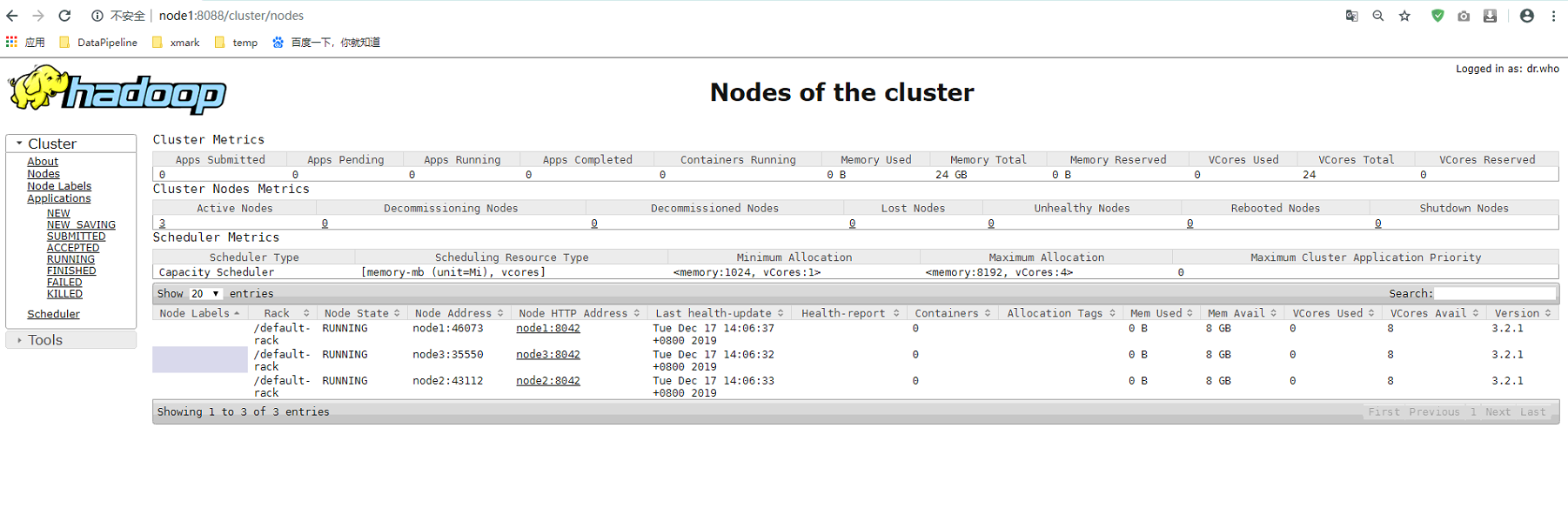

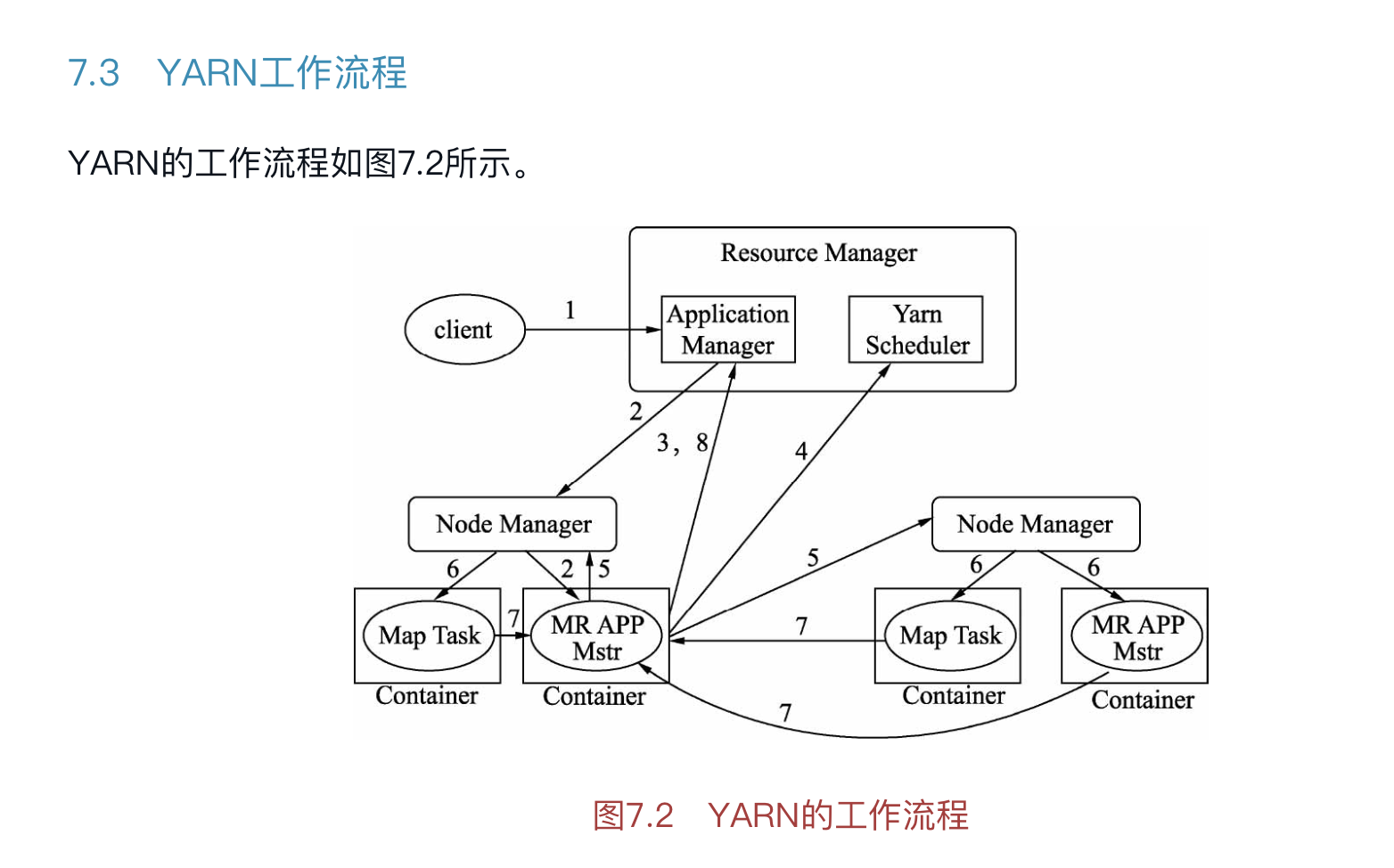

YARN

MapReduce V2

YARN: Yet Another Resource Negotiator

ResourceManager

Scheduler

ApplicationsManager

ApplicationMaster

NodeManager

https://www.cnblogs.com/liuys635/p/11069313.html

YARN XML configuration

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
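mapreduce.framework.name accepts only local, classic or yarn. As far as I know, a misspelled value is not caught when the file is loaded and only shows up as an error at job submission, so a quick check before distributing the config can save a round trip. `check_framework` is a hypothetical helper.

```shell
# check_framework VALUE: succeed only for a value Hadoop documents as valid.
check_framework() {
    case $1 in
        local|classic|yarn)
            echo "ok: $1" ;;
        *)
            echo "invalid mapreduce.framework.name: $1"
            return 1 ;;
    esac
}

check_framework yarn            # ok: yarn
check_framework yarm || true    # invalid mapreduce.framework.name: yarm
```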

yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>node1:8031</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>node1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>node1:8034</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>node1:8088</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://node1:19888/jobhistory/logs</value>

</property>

</configuration>
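Most of the ResourceManager addresses above restate the defaults from yarn-default.xml; the scheduler address (8034) is the one that differs (the default is 8030). That is allowed, but every node must then use the same yarn-site.xml. A sketch that flags non-default ports follows. `default_rm_port` and `check_port` are hypothetical helpers, and the table covers only the five ResourceManager addresses.

```shell
# default_rm_port PROPERTY: print the default port, or fail if unknown.
default_rm_port() {
    case $1 in
        yarn.resourcemanager.address)                  echo 8032 ;;
        yarn.resourcemanager.scheduler.address)        echo 8030 ;;
        yarn.resourcemanager.resource-tracker.address) echo 8031 ;;
        yarn.resourcemanager.admin.address)            echo 8033 ;;
        yarn.resourcemanager.webapp.address)           echo 8088 ;;
        *) return 1 ;;
    esac
}

# check_port PROPERTY HOST:PORT: compare the configured port with the default.
check_port() {
    port=${2##*:}
    def=$(default_rm_port "$1") || { echo "$1: no known default"; return 0; }
    if [ "$port" = "$def" ]; then
        echo "$1: $port (default)"
    else
        echo "$1: $port (default is $def)"
    fi
}

check_port yarn.resourcemanager.scheduler.address node1:8034
# yarn.resourcemanager.scheduler.address: 8034 (default is 8030)
check_port yarn.resourcemanager.webapp.address node1:8088
# yarn.resourcemanager.webapp.address: 8088 (default)
```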